Brain signals translated into speech give paralyzed man back his words

Scientists at UC San Francisco have successfully developed a "speech neuroprosthesis" device that has enabled a man with severe paralysis to communicate in sentences, translating signals from his brain to text on a screen.

UCSF neurosurgeon Edward Chang, MD, has spent more than a decade developing a technology that allows people with paralysis to communicate even if they cannot speak. The achievement came in collaboration with the first participant in a clinical research study.

The research, published in the New England Journal of Medicine, is the first successful demonstration of direct decoding of complete words from the brain activity of someone who cannot speak due to paralysis.

Brain activity translation to speech

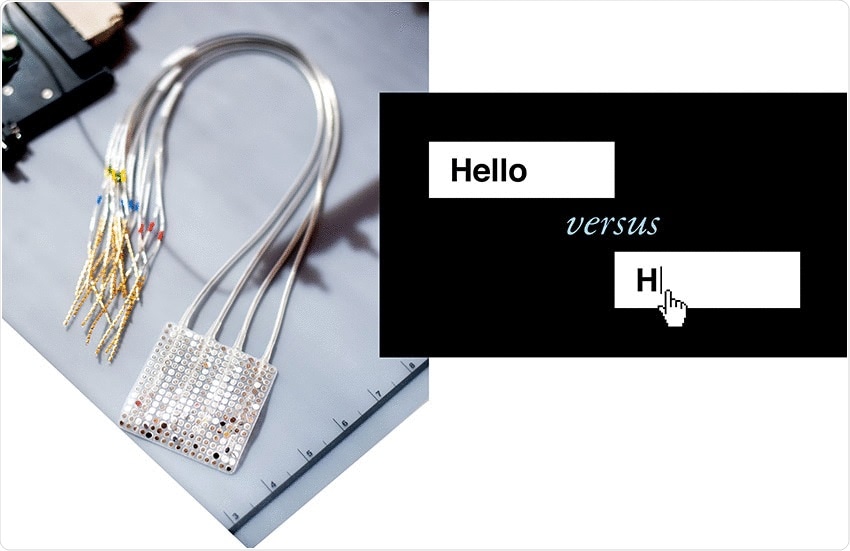

In the past, the field of communication neuroprosthetics focused on restoring communication through spelling-based methods, in which letters are typed out one by one. In the current study, the researchers instead translate the signals intended to control the muscles of the vocal system into spoken words.

The team aims to give paralyzed patients a more natural way to communicate.

"With speech, we normally communicate information at a very high rate, up to 150 or 200 words per minute. Going straight to words, as we're doing here, has great advantages because it's closer to how we normally speak," Dr. Edward Chang, Joan and Sanford Weill Chair of Neurological Surgery at UCSF, noted.

Each year, thousands of people lose the ability to speak due to strokes, accidents, or diseases. With further development, this method may one day allow these patients to communicate fully with their families.

The researchers implanted a subdural, high-density multielectrode array over the area of the sensorimotor cortex that controls speech. The participant has anarthria, the loss of the ability to articulate speech.

Over 48 sessions, the team recorded 22 hours of cortical activity while the study participant attempted to speak individual words from a 50-word vocabulary. Next, the team used deep-learning algorithms to develop computational models that detect and classify words based on the recorded patterns of brain activity.

The researchers then combined these computational models with a natural-language model that supplies next-word probabilities, allowing them to decode the full sentences the participant was attempting to say.

To translate the patterns of recorded neural activity into specific intended words, the team used custom neural network models, a form of artificial intelligence.
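The idea of combining a word classifier with next-word probabilities can be illustrated with a small sketch. The example below is not the team's code: the four-word vocabulary, the probability values, and the function names are all hypothetical, and Viterbi search over a bigram model is used here only as one standard way to combine the two sources of evidence.

```python
import math

# Hypothetical 4-word vocabulary (the study used 50 words).
VOCAB = ["i", "am", "good", "thirsty"]

# Hypothetical bigram language model: P(next word | previous word).
# "<s>" marks the start of a sentence. All numbers are invented.
BIGRAM = {
    "<s>":     {"i": 0.70, "am": 0.10, "good": 0.10, "thirsty": 0.10},
    "i":       {"i": 0.05, "am": 0.80, "good": 0.10, "thirsty": 0.05},
    "am":      {"i": 0.05, "am": 0.05, "good": 0.45, "thirsty": 0.45},
    "good":    {"i": 0.40, "am": 0.10, "good": 0.10, "thirsty": 0.40},
    "thirsty": {"i": 0.40, "am": 0.10, "good": 0.40, "thirsty": 0.10},
}

# Hypothetical classifier output: one probability table per attempted word.
ATTEMPTS = [
    {"i": 0.60, "am": 0.20, "good": 0.10, "thirsty": 0.10},
    {"i": 0.40, "am": 0.35, "good": 0.15, "thirsty": 0.10},  # ambiguous attempt
    {"i": 0.10, "am": 0.10, "good": 0.30, "thirsty": 0.50},
]


def greedy_decode(attempts):
    """Pick the classifier's top word for each attempt, ignoring context."""
    return [max(probs, key=probs.get) for probs in attempts]


def viterbi_decode(attempts):
    """Find the word sequence maximizing classifier x language-model probability."""
    # best[w] = (log score of the best path ending in word w, that path)
    best = {
        w: (math.log(attempts[0][w]) + math.log(BIGRAM["<s>"][w]), [w])
        for w in VOCAB
    }
    for probs in attempts[1:]:
        new_best = {}
        for w in VOCAB:
            # Choose the previous word that gives the best score when followed by w.
            prev = max(VOCAB, key=lambda p: best[p][0] + math.log(BIGRAM[p][w]))
            score = best[prev][0] + math.log(BIGRAM[prev][w]) + math.log(probs[w])
            new_best[w] = (score, best[prev][1] + [w])
        best = new_best
    return max(best.values(), key=lambda entry: entry[0])[1]


print(greedy_decode(ATTEMPTS))   # ['i', 'i', 'thirsty']
print(viterbi_decode(ATTEMPTS))  # ['i', 'am', 'thirsty']
```

In this toy case the classifier alone picks "i" for the second attempt, while the language model makes "am" far more likely after "i", so the combined decoder recovers a plausible sentence.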

Study findings

The team decoded sentences from the cortical activity of the study participant in real time at a median rate of 15.2 words per minute. They also detected 98 percent of the participant's attempts to produce individual words and classified words with 47.1 percent accuracy.

At its best, the team's system decoded words from brain activity at a rate of up to 18 words per minute with up to 93 percent accuracy.
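To make figures like these concrete, the sketch below shows how a decoding rate and a word-level error rate could be computed for a single sentence. The article does not describe the scoring procedure, so this is an assumption: word error rate based on edit distance is a common metric for decoded text, and the function names and example sentences are hypothetical.

```python
def word_error_rate(reference, decoded):
    """Word-level edit distance between the two sentences, divided by reference length."""
    ref, hyp = reference.split(), decoded.split()
    # prev[j] = edits needed to turn the first i-1 reference words into hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from the output
            insertion = curr[j - 1] + 1  # extra word in the output
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


def words_per_minute(n_words, seconds):
    """Decoding rate for n_words produced in the given number of seconds."""
    return n_words * 60.0 / seconds


# Hypothetical example: one word of a four-word sentence is dropped.
print(word_error_rate("i am very good", "i am good"))  # 0.25
print(words_per_minute(4, 16))                         # 15.0
```

Under this assumed metric, an accuracy figure corresponds to one minus the word error rate, so the example sentence would score 75 percent.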

"In a person with anarthria and spastic quadriparesis caused by a brain-stem stroke, words and sentences were decoded directly from cortical activity during attempted speech with the use of deep-learning models and a natural-language model," the researchers concluded.

Overall, decoding performance was maintained or improved by accumulating large quantities of training data over time without daily recalibration, suggesting that high-density electrocorticography may be suitable for long-term direct-speech neuroprosthetic applications.

As the first demonstration of communication restored in this way, the approach could potentially be applied to conversational situations. The team is currently working to increase the vocabulary, and future plans include expanding the trial to include more participants.

- “Neuroprosthesis” Restores Words to Man with Paralysis – https://www.ucsf.edu/news/2021/07/420946/neuroprosthesis-restores-words-man-paralysis

- Moses, D., Metzger, S., Liu, J. et al. (2021). Neuroprosthesis for Decoding Speech in a Paralyzed Person with Anarthria. The New England Journal of Medicine. https://www.nejm.org/doi/full/10.1056/NEJMoa2027540


Written by

Angela Betsaida B. Laguipo

Angela is a nurse by profession and a writer by heart. She graduated with honors (cum laude) with a Bachelor of Nursing degree from the University of Baguio, Philippines. She is currently completing her master's degree, specializing in Maternal and Child Nursing, and has worked as a clinical instructor and educator in the School of Nursing at the University of Baguio.
