Less is more: The efficient structural and dynamic organization of the brain

The human brain performs high-capacity computations yet requires only about 20 W of power, far less than electronic computers. Neuronal connections in the brain network are globally sparse but locally compact, forming a modular topology that greatly reduces the total resources needed to establish the connections. The spikes of individual neurons are sparse and irregular, while the clustered firing of neuronal populations shows a certain degree of synchronization, forming neural avalanches with scale-free characteristics, and the network can respond sensitively to external stimuli. However, it is still unclear how the brain’s structure and dynamics self-organize to be jointly optimized for cost efficiency.

Recently, Junhao Liang and Changsong Zhou from Hong Kong Baptist University and Sheng-Jun Wang from Shaanxi Normal University set out to address this question with a biological neural network model, combining large-scale numerical simulation with a novel mean-field theory analysis. In their research article published in National Science Review, they studied an excitation-inhibition balanced neural dynamics model on a spatial network.

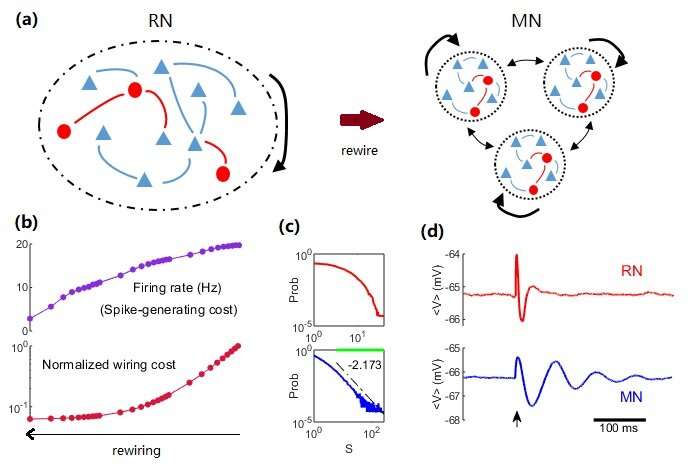

The research showed that when a globally sparse, randomly connected network (RN) is rewired into a more biologically realistic modular network (MN), both the network’s running cost (neuronal firing rate) and the cost of building its connections are significantly reduced. At the same time, the network dynamics move into the regime that allows scale-free avalanches (that is, criticality), which makes the network more sensitive to external stimuli.
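The structural half of this result can be illustrated with a toy calculation. The article does not spell out the rewiring procedure, so the following is only a minimal sketch under assumed rules: neurons sit on a square grid, modules are square blocks of the grid, wiring cost is the summed Euclidean length of all connections, and each inter-module connection is moved inside the module of one of its endpoints with probability `p_rewire`. All function names and parameter values are hypothetical and chosen for illustration, not taken from the paper.

```python
import math
import random

def grid_positions(n_side):
    """Place n_side * n_side neurons on a unit-spaced square grid (assumed layout)."""
    return [(x, y) for x in range(n_side) for y in range(n_side)]

def module_of(pos, module_side):
    """Label of the module_side x module_side block that contains this position."""
    return (pos[0] // module_side, pos[1] // module_side)

def random_network(n, n_edges, rng):
    """Sparse random network (RN): n_edges distinct undirected pairs."""
    edges = set()
    while len(edges) < n_edges:
        i, j = rng.sample(range(n), 2)
        edges.add((min(i, j), max(i, j)))
    return edges

def rewire_to_modular(edges, positions, module_side, p_rewire, rng):
    """Move inter-module edges inside the module of their first endpoint (MN)."""
    members = {}
    for idx, pos in enumerate(positions):
        members.setdefault(module_of(pos, module_side), []).append(idx)
    new_edges = set(edges)
    for i, j in sorted(edges):
        mi = module_of(positions[i], module_side)
        mj = module_of(positions[j], module_side)
        if mi == mj or rng.random() >= p_rewire:
            continue  # keep intra-module edges and a fraction of long-range ones
        for _ in range(20):  # a few attempts to find an unused local partner
            k = rng.choice(members[mi])
            candidate = (min(i, k), max(i, k))
            if k != i and candidate not in new_edges:
                new_edges.remove((i, j))
                new_edges.add(candidate)
                break
    return new_edges

def wiring_cost(edges, positions):
    """Total connection length, used here as a stand-in for building cost."""
    return sum(math.dist(positions[i], positions[j]) for i, j in edges)

def intra_fraction(edges, positions, module_side):
    """Fraction of edges whose two endpoints lie in the same module."""
    same = sum(
        module_of(positions[i], module_side) == module_of(positions[j], module_side)
        for i, j in edges
    )
    return same / len(edges)

rng = random.Random(0)
positions = grid_positions(20)            # 400 neurons
rn = random_network(len(positions), 1600, rng)
mn = rewire_to_modular(rn, positions, 5, 0.9, rng)

print("edges:", len(rn), "->", len(mn))
print("intra-module fraction:", intra_fraction(rn, positions, 5),
      "->", intra_fraction(mn, positions, 5))
print("wiring cost:", wiring_cost(rn, positions), "->", wiring_cost(mn, positions))
```

The number of connections is unchanged by the rewiring, so any drop in wiring cost comes purely from replacing long-range connections with short local ones. This sketch says nothing about firing rates or avalanches, which require the dynamical model studied in the paper.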

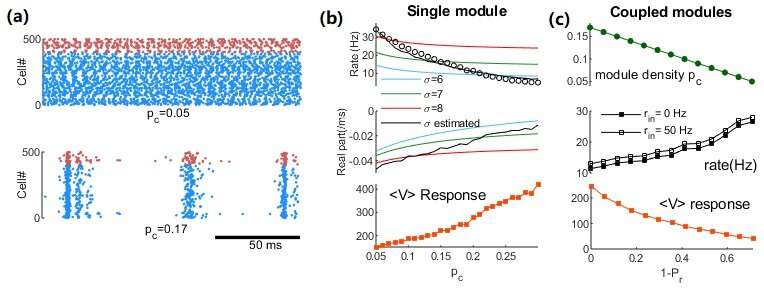

Further analysis found that the increased connection density within single modules during rewiring is key to explaining the dynamical transition: higher topological correlation in the network leads to higher dynamical correlation, which makes neurons fire spikes more easily. Using a novel approximate mean-field theory, the authors derived macroscopic field equations for a single module, revealing that increasing the module density lowers the neural firing rate and brings the system closer to a Hopf bifurcation. This explains the formation of critical avalanches and the increased sensitivity to external stimuli at a lower firing cost. A coupled oscillator model, obtained by coupling multiple modules, further reveals the dynamic transition that occurs during the rewiring of the original network.
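Why proximity to a Hopf bifurcation goes together with higher sensitivity can be seen in a generic example. The sketch below does not use the authors' mean-field equations, which are not given in this article. It assumes instead the standard Hopf normal form driven at its own frequency, written in the rotating frame as dA/dt = mu*A - A^3 + F, where `mu` (distance to the bifurcation at mu = 0), `forcing` (stimulus strength F) and the function name are illustrative assumptions.

```python
def steady_response(mu, forcing, dt=0.01, t_max=400.0):
    """Euler-integrate dA/dt = mu*A - A**3 + forcing and return the final amplitude.

    mu < 0 is the quiescent side of the Hopf bifurcation located at mu = 0.
    """
    amp = 0.0
    for _ in range(int(t_max / dt)):
        amp += dt * (mu * amp - amp ** 3 + forcing)
    return amp

forcing = 1e-3                                      # weak external stimulus
far = steady_response(mu=-1.0, forcing=forcing)     # far from the bifurcation
near = steady_response(mu=-0.05, forcing=forcing)   # close to the bifurcation

print("response far from bifurcation: ", far)
print("response near the bifurcation: ", near)
print("amplification ratio:", near / far)
```

For a weak stimulus the steady response is approximately F / |mu|, so the same input produces a much larger response as mu approaches zero. In this toy picture, a module that sits closer to the bifurcation reacts more strongly to stimuli without needing a higher baseline activity.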

The research clearly demonstrates a principle for achieving co-optimization of (rather than a trade-off between) the brain’s structural and dynamic properties. It offers a novel understanding of the cost-efficient operating principle of the brain and provides insights for the design of brain-inspired computational devices.
